October 16, 2023

Incoming 2024 EU and US Elections Under Deep Fakes Empire


With the emergence of AI-generated content, disinformation enters a new realm: multimedia fake news can now be created and disseminated at an unprecedented scale, speed, and level of realism. The upcoming 2024 EU and US elections will be the first major democratic elections held in the era of AI-powered deep fakes. The Digital Services Act (DSA), the EU Code of Practice on Disinformation (the Code), and independent third parties capable of authenticating the provenance of content are the tools agreed upon by the EU and the platforms.

What makes this fight challenging is the fact that disinformation is not fundamentally illegal. Striking a delicate balance between safeguarding freedom of expression and implementing effective measures to curb the spread and impact of disinformation is essential. This calls for a judicious approach that upholds democratic values while mitigating the harmful effects of false information.

EU institutions are fully aware of the risks of disinformation and are trying to tackle the phenomenon through a holistic approach.

The four attack angles of the Digital Services Act against disinformation

On 25 August 2023, the Digital Services Act (“DSA”) came into effect for very large online platforms (Facebook, Instagram, Pinterest, Snapchat, TikTok, X…) and very large online search engines (Google search, Bing).

The DSA aims to combat disinformation on online platforms from four main angles:

- faster removal of obviously illegal fake news,

- reduced visibility in recommendation systems for fake news that is not considered illegal,

- mitigation measures, put in place after platforms analyze their specific risks, to address the spread of disinformation and the inauthentic use of their services, and

- specific protocols to act against content that may have a real or foreseeable negative impact on public security and democratic or electoral processes, with reinforced measures in times of crisis, such as existing or new armed conflicts, acts of terrorism, natural disasters, or pandemics.

The tools required by the EU Code of Practice on Disinformation

In addition to the DSA, the EU Code of Practice on Disinformation (“the Code”) is a first-of-its-kind tool through which relevant industry players agreed, for the first time in 2018, on self-regulatory standards to combat disinformation.

The revised Code signed on 16 June 2022 will become part of a broader regulatory framework in combination with the DSA. For signatories - very large online platforms and search engines - the Code aims to become a mitigation measure recognized under the co-regulatory framework of the DSA.

The Code contains 44 commitments and 128 specific measures.

Commitment 20 stipulates: "Relevant Signatories commit to empower users with tools to assess the provenance and edit history or authenticity or accuracy of digital content. Relevant Signatories will develop technology solutions to help users check authenticity or identify the provenance or source of digital content, such as new tools or protocols or new open technical standards for content provenance."

Commitment 22 specifies: "Relevant Signatories commit to provide users with tools to help them make more informed decisions when they encounter online information that may be false or misleading, and to facilitate user access to tools and information to assess the trustworthiness of information sources, such as indicators of trustworthiness for informed online navigation, particularly relating to societal issues or debates of general interest. Relevant Signatories will make it possible for users of their services to access indicators of trustworthiness (such as trust marks focused on the integrity of the source and the methodology behind such indicators) developed by independent third-parties, in collaboration with the news media, including associations of journalists and media freedom organisations, as well as fact-checkers and other relevant entities, that can support users in making informed choices. Relevant Signatories will give users the option of having signals relating to the trustworthiness of media sources into the recommender systems or feed such signals into their recommender systems."

In summary, curbing disinformation requires two things: giving users transparent tools to assess the trustworthiness of information, and detecting and increasing the visibility of real content from trusted sources, using tools capable of guaranteeing the accuracy, integrity, and trustworthiness of its provenance.

While it seems crucial to identify AI-generated content to help users find their way around, equal importance should be placed on identifying genuine content from verified and reliable sources, such as news content from press agencies, media or other authoritative sources.

The European Commission has recommended using tools provided by independent third parties, but according to the Transparency Center, signatories of the Code generally report these commitments as “not subscribed” or limit them to fact-checking initiatives.

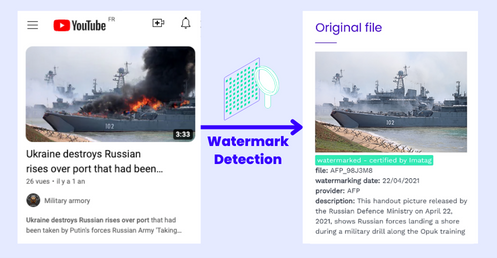

In what ways will the invisible watermark from an Independent Third Party truly address the issue?

The best way to technically certify content is to watermark it with an invisible signal directly at the source (cf. “Google DeepMind’s SynthID and the Imperative of Comprehensive Watermarking”). This technology has existed for many years but has recently seen notable improvements (see “DeepMind partners with Google Cloud to watermark AI-generated images”, TechCrunch). According to OpenAI as well as Google, robust watermarking is the best solution for certifying visual content. Last month, Google launched its own watermarking system, SynthID. It has a significant limitation, however: SynthID only identifies content generated by Google's AI, for example by Imagen, and only within the Google ecosystem.

This limitation does not apply to an independent third party able to watermark real content from verified sources, a technology that could potentially be adopted by all online platforms.

Labeling authentic content from trusted sources with a robust and invisible watermark inherently provides multiple advantages for both the public and online platforms:

Restoring trust between users and online platforms

First, trust between users and online platforms can be restored by labeling real content from trusted sources, in addition to labeling AI-generated content, and by providing users with critical-thinking tools. Users could immediately see whether a piece of content is AI-generated, real, manipulated or falsely attributed real content, or unidentified.

Second, the ability to view genuine content together with its associated metadata and context of origin would be a major advantage for users and online platforms. Both could immediately identify manipulation or false attribution. This would open a path for online platforms to delete content immediately in cases of clear illegality, or to reduce its visibility within recommendation systems if it is deemed to be “non illegal” fake news.
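The four labels and the two platform responses described above can be sketched in a few lines of Python. This is only an illustrative sketch: `WatermarkResult`, `classify`, and `platform_action` are hypothetical names, not part of any real watermarking product or platform API, and the detector output is assumed rather than taken from an actual system.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Provenance(Enum):
    AI_GENERATED = "AI-generated content"
    AUTHENTIC = "real content from a trusted source"
    ALTERED = "manipulated or falsely attributed real content"
    UNIDENTIFIED = "unidentified content"


@dataclass
class WatermarkResult:
    """Hypothetical output of a third-party watermark detector (assumed fields)."""
    found: bool                               # was an invisible watermark detected?
    ai_generated: bool = False                # watermark was applied by an AI generator
    registered_source: Optional[str] = None   # source recorded when the watermark was applied
    matches_original: bool = True             # False if the content differs from the registered original


def classify(result: WatermarkResult, claimed_source: Optional[str]) -> Provenance:
    """Map a detector result to one of the four labels a user would see."""
    if not result.found:
        return Provenance.UNIDENTIFIED
    if result.ai_generated:
        return Provenance.AI_GENERATED
    if not result.matches_original or claimed_source != result.registered_source:
        return Provenance.ALTERED
    return Provenance.AUTHENTIC


def platform_action(label: Provenance, illegal: bool) -> str:
    """Only the case discussed in the text is handled: altered content is removed
    when clearly illegal, otherwise demoted. Everything else is simply labeled."""
    if label is Provenance.ALTERED:
        return "remove" if illegal else "reduce visibility"
    return "show label"
```

For example, an image whose watermark was registered by one press agency but which circulates under another outlet's name would be classified as `Provenance.ALTERED`, and the platform would either remove it or reduce its visibility depending on the legal assessment, which remains a human or legal decision outside this sketch.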

Another advantage for very large online platforms and search engines would be to strengthen the mitigation measures the DSA requires against the spread of disinformation, while helping to develop new strategies to combat misinformation during political and economic crises. The recent elections in Slovakia showed the limited effectiveness of the anti-fake-news tools deployed by some online platforms.

The fight against Infocalypse

The term “Infocalypse” usually refers to an impending overload of false information.

On 26 September 2023, major online platforms reported on the first six months under the new Code of Practice on Disinformation.

According to Commissioner Jourová: “Disinformation is still one of the greatest risks to the European democratic information space, including that related to Russia's war in Ukraine and elections. As Europeans will prepare to head to polling stations in 2024, all actors must do their part in fighting online disinformation and foreign interference to protect our online debate. The Code proves to be a useful exercise, but we all have to do more.”

The next set of reports is due in early 2024 (with information and data covering the second half of 2023) and should provide further insights into the Code’s implementation. Those reports will also contain information on how signatories are preparing and putting in place measures to reduce the spread of disinformation ahead of the 2024 EU and US elections, while continuing to report on their efforts in the context of the war in Ukraine, to which we can already add the war in Israel.

This is a call for labeling real content from reliable sources by digital watermarking. If this measure is adopted by major online platforms in the near future, one or several of the four horsemen of Infocalypse could fall off their horses.
